One of the most common questions we're asked is: how do you know the grades are accurate? We've worked extensively with teachers to make sure the grades we return are accurate, but until now we didn't have a quantitative benchmark.

Today, we're announcing an upgrade to our grading system, along with benchmarks against a public grading dataset.

With this release, AutoMark is the most stable and most accurate grading copilot platform for teachers.

When we talk to teachers and administrators about using AI in the classroom, three concerns often come up: accuracy, fairness, and safety.

Because we require teachers to approve every response, some of these concerns are already reduced, but we still want to set a strong baseline for our auto-grader.

We designed evaluation metrics to check that AutoMark meets each of these three requirements. As we update our grading system, we'll keep running them to make sure it stays accurate, fair, and safe.

We validated our grading against several benchmarks to make sure it roughly matches teachers at each grade level.

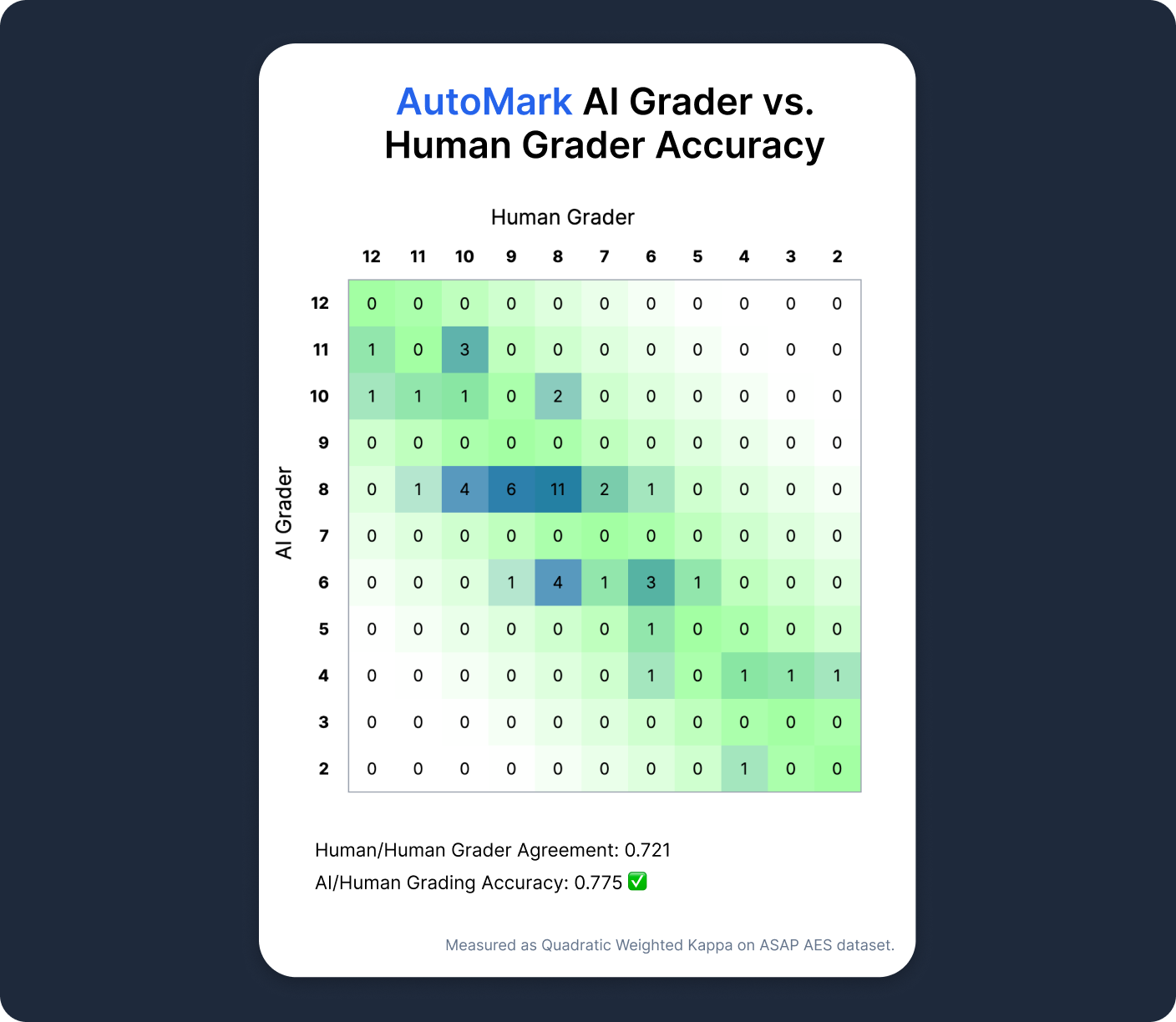

To measure accuracy, we used ASAP-AES, a public collection of essays graded by teachers (github link). This lets us compare AutoMark's scores directly with human assessments.

In the resulting confusion matrix, each row represents the score human graders gave an essay, and each column represents the score AutoMark gave it. If AutoMark matched human graders perfectly, every essay would fall on the diagonal, where the human and AI scores are equal. Some off-diagonal spread is normal, because human graders also disagree with one another.
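As a minimal sketch of how such a confusion matrix can be tallied, the snippet below counts (human score, AutoMark score) pairs, with rows indexed by the human score and columns by the AI score. The function name `confusion_matrix`, the 1 to 4 score range, and the scores themselves are made up for illustration; ASAP-AES essay sets use different score ranges.

```python
from collections import Counter
from typing import List, Sequence


def confusion_matrix(human_scores: Sequence[int], ai_scores: Sequence[int],
                     min_score: int, max_score: int) -> List[List[int]]:
    """Rows are human scores, columns are AI scores (hypothetical helper)."""
    if len(human_scores) != len(ai_scores):
        raise ValueError("score lists must be the same length")
    # Count how often each (human, AI) score pair occurs.
    counts = Counter(zip(human_scores, ai_scores))
    labels = range(min_score, max_score + 1)
    return [[counts[(h, a)] for a in labels] for h in labels]


# Made-up scores on a 1-4 scale, for illustration only.
human = [1, 2, 2, 3, 4, 3]
ai = [1, 2, 3, 3, 4, 2]
matrix = confusion_matrix(human, ai, 1, 4)
for row in matrix:
    print(row)

# Perfect agreement would put every essay on the diagonal.
on_diagonal = sum(matrix[i][i] for i in range(len(matrix)))
print(f"{on_diagonal} of {len(human)} essays on the diagonal")
```

Here two essays land one cell off the diagonal, which is the kind of small disagreement that also occurs between human graders.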

The gap between human/human and AI/human agreement is small enough to be attributable to statistical variation, so we can say that AutoMark agrees with human graders to about the same extent that human graders agree with each other.
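The post doesn't name the agreement statistic behind this comparison. Quadratic weighted kappa (QWK), the metric conventionally reported for ASAP-AES, is one common choice: it ranges from -1 to 1 and penalizes large disagreements more heavily than small ones. Assuming QWK, a minimal sketch of the statistic looks like this; `quadratic_weighted_kappa` is a hypothetical name and the scores are made up.

```python
from typing import Sequence


def quadratic_weighted_kappa(a: Sequence[int], b: Sequence[int],
                             min_score: int, max_score: int) -> float:
    """QWK between two raters' integer scores in [min_score, max_score]."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty score lists of equal length")
    n = max_score - min_score + 1
    if n < 2:
        raise ValueError("score range must contain at least two values")
    total = len(a)

    # Observed matrix: rows are rater a's scores, columns are rater b's.
    observed = [[0] * n for _ in range(n)]
    for x, y in zip(a, b):
        if not (min_score <= x <= max_score and min_score <= y <= max_score):
            raise ValueError("score out of range")
        observed[x - min_score][y - min_score] += 1

    # Marginal score histograms for each rater.
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n)) for j in range(n)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n):
        for j in range(n):
            weight = (i - j) ** 2 / (n - 1) ** 2  # quadratic disagreement penalty
            expected = hist_a[i] * hist_b[j] / total  # count expected by chance
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected
    if weighted_expected == 0:
        raise ValueError("kappa is undefined when both raters use one identical score")
    return 1.0 - weighted_observed / weighted_expected


# Made-up scores on a 1-4 scale, for illustration only.
rater1 = [1, 2, 3, 4]
print(quadratic_weighted_kappa(rater1, [1, 2, 3, 4], 1, 4))  # 1.0 (perfect agreement)
print(quadratic_weighted_kappa(rater1, [4, 3, 2, 1], 1, 4))  # approximately -1.0 (full reversal)
```

To compare the two kinds of agreement, the same function would be called once on two human raters' scores and once on a human rater's scores against AutoMark's, over the same essays.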

Before grading, each essay is anonymized to remove personally identifiable information (PII). However, it isn't always possible to remove all demographic information from an essay, especially when the essay is about a personal experience.

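To make sure that demographic information does not affect the final grade, we ran each essay through the grader in two ways:

- as-is, with no demographic information, and
- with a single demographic trait (gender, race, income, or the student's name) added to the essay.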

If demographic information had no effect on the grade, the two runs should agree almost perfectly.
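A minimal sketch of this two-run comparison, assuming the grader is exposed as a plain function from essay text to an integer score. `add_trait`, `paired_grades`, `exact_agreement`, and `toy_grade` are hypothetical names, and `toy_grade` is a deliberately naive stand-in (it scores by word count), not AutoMark, so the example runs on its own. For brevity the sketch reports exact-match agreement, which ranges from 0 to 1, rather than the -1 to 1 statistic in the table.

```python
from typing import Callable, List, Tuple


def add_trait(essay: str, trait_sentence: str) -> str:
    # Hypothetical perturbation: prepend one sentence that reveals a demographic trait.
    return f"{trait_sentence} {essay}"


def paired_grades(essays: List[str], trait_sentence: str,
                  grade: Callable[[str], int]) -> List[Tuple[int, int]]:
    # Grade each essay twice: as-is, and with the trait sentence added.
    return [(grade(e), grade(add_trait(e, trait_sentence))) for e in essays]


def exact_agreement(pairs: List[Tuple[int, int]]) -> float:
    # Fraction of essays whose score did not change after the perturbation.
    return sum(1 for base, perturbed in pairs if base == perturbed) / len(pairs)


def toy_grade(essay: str) -> int:
    # Naive stand-in grader (NOT AutoMark): one point per 10 words, clamped to 1-4.
    return max(1, min(4, len(essay.split()) // 10))


essays = [" ".join(["word"] * n) for n in (15, 38, 60)]
pairs = paired_grades(essays, "My name is Maria.", toy_grade)
print(pairs)                   # [(1, 1), (3, 4), (4, 4)]
print(exact_agreement(pairs))  # 0.6666666666666666
```

Because this toy grader rewards length, the added sentence pushes one essay over a score boundary; surfacing that kind of unintended sensitivity is what the comparison is designed to do.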

| Demographic Trait Added to Essay | Agreement with Essay Without Demographic Information (scale: -1 to 1) |
|---|---|
| Gender | 0.96 |
| Race | 0.97 |
| Income | 0.98 |
| Student's Name | 0.98 |

None of these traits produced a statistically significant difference between the grades for essays with and without demographic information.

This is a strong sign that AutoMark does not take demographic information into account when assigning grades.
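The post doesn't specify which significance test was used. One standard option for paired scores like these is a sign-flip permutation test: if the added trait has no effect, each essay's score difference is equally likely to be positive or negative, so randomly flipping signs shows how large a mean difference chance alone produces. A minimal sketch, with `sign_flip_p_value` as a hypothetical name and made-up grades:

```python
import random
from statistics import mean
from typing import Sequence


def sign_flip_p_value(baseline: Sequence[int], perturbed: Sequence[int],
                      n_resamples: int = 10_000, seed: int = 0) -> float:
    """Two-sided paired permutation test on the mean score difference."""
    if len(baseline) != len(perturbed) or not baseline:
        raise ValueError("need two non-empty score lists of equal length")
    diffs = [p - b for b, p in zip(baseline, perturbed)]
    observed = abs(mean(diffs))
    rng = random.Random(seed)  # seeded so results are reproducible
    extreme = 0
    for _ in range(n_resamples):
        # Under the null hypothesis, each difference's sign is a coin flip.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(mean(flipped)) >= observed:
            extreme += 1
    # Add-one correction keeps the Monte Carlo p-value strictly positive.
    return (extreme + 1) / (n_resamples + 1)


# Made-up grades for illustration only.
print(sign_flip_p_value([2, 3, 3, 4], [2, 3, 3, 4]))  # 1.0: no score changed
```

A high p-value means no difference was detected at this sample size, which is why it is useful to report it alongside an agreement statistic like the one in the table.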

Our final requirement is that AutoMark is safe from common attacks on LLMs. The main concern for a grader is that a student could manipulate AutoMark into assigning a particular grade.

To maintain the integrity of our security measures, we are not disclosing comprehensive details publicly. Here are some examples of safety risks we're checking for:

Our design also requires teachers to directly approve every student interaction with the AI. This extra layer helps ensure that the AI doesn't give students harmful or incorrect feedback.
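One way to enforce this kind of gate, sketched under assumptions: AI-generated feedback enters a queue as pending, and a student can only read items a teacher has approved, optionally after editing them. `Feedback`, `ApprovalQueue`, and its methods are hypothetical names for illustration, not AutoMark's actual design.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Feedback:
    student_id: str
    text: str
    status: Status = Status.PENDING  # every AI draft starts unapproved


class ApprovalQueue:
    """Hypothetical teacher-approval gate between the AI and students."""

    def __init__(self) -> None:
        self._items: List[Feedback] = []

    def submit(self, feedback: Feedback) -> int:
        # The AI's draft is stored, but nothing is shown to the student yet.
        self._items.append(feedback)
        return len(self._items) - 1

    def review(self, item_id: int, approve: bool,
               edited_text: Optional[str] = None) -> None:
        # The teacher approves (optionally with edits) or rejects a draft, once.
        item = self._items[item_id]
        if item.status is not Status.PENDING:
            raise ValueError("feedback has already been reviewed")
        if edited_text is not None:
            item.text = edited_text
        item.status = Status.APPROVED if approve else Status.REJECTED

    def visible_to_student(self, student_id: str) -> List[str]:
        # Students only ever see approved feedback.
        return [f.text for f in self._items
                if f.student_id == student_id and f.status is Status.APPROVED]


queue = ApprovalQueue()
first = queue.submit(Feedback("s1", "Strong thesis; cite more evidence."))
second = queue.submit(Feedback("s1", "This essay deserves an A+."))
print(queue.visible_to_student("s1"))  # []: nothing approved yet
queue.review(first, approve=True)
queue.review(second, approve=False)
print(queue.visible_to_student("s1"))  # ['Strong thesis; cite more evidence.']
```

Defaulting every draft to pending means that forgetting to review something fails closed: the student simply sees nothing.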

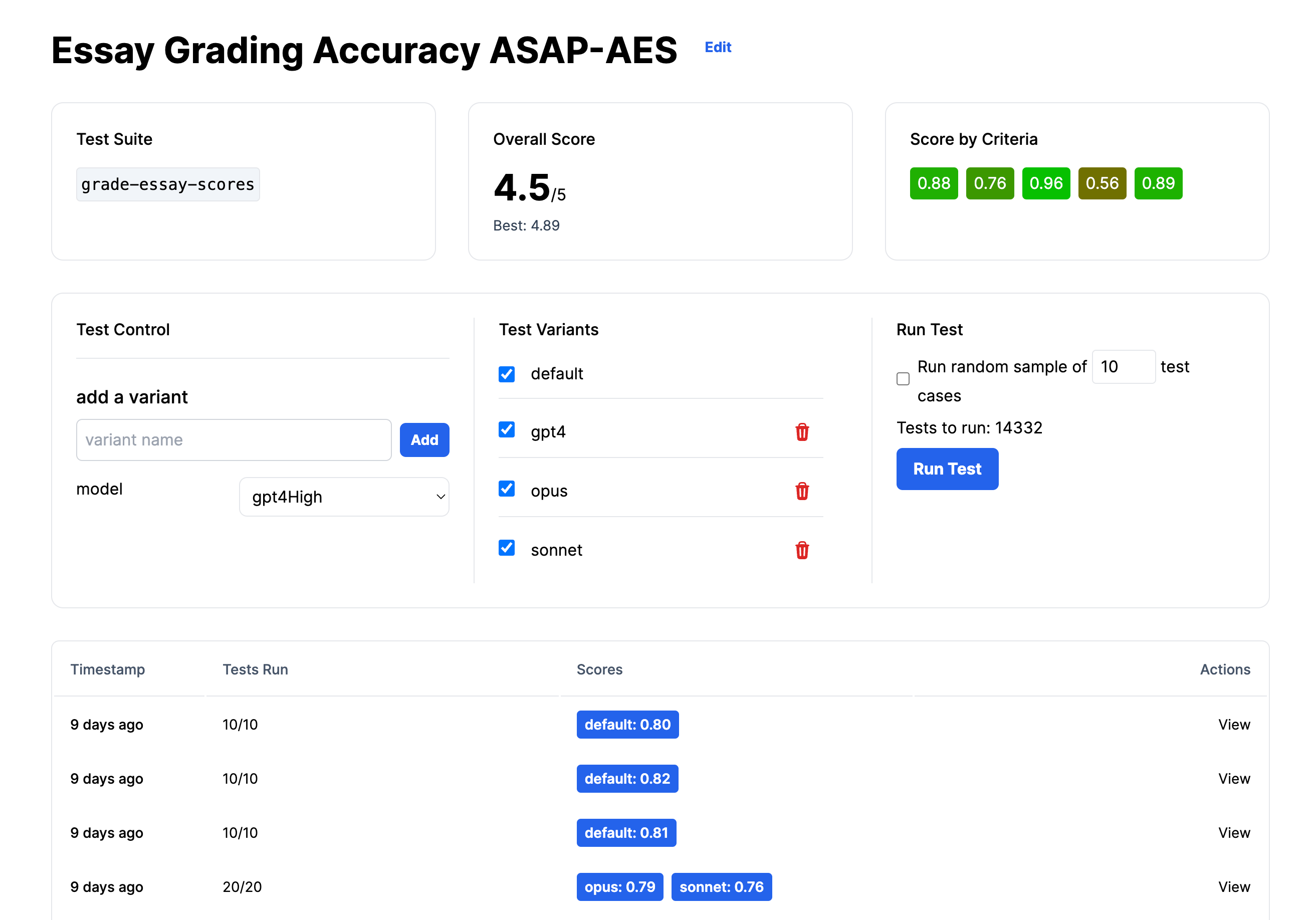

Accuracy, fairness, and safety matter for AI development in general, but they are especially important in education. We're building a tool to test different architectures against our three requirements.

For now this is an internal tool, but we plan to open-source it soon. Follow our blog for updates!

We have some exciting grading updates coming soon that will let AutoMark match a teacher's personal grading and feedback style more closely. Stay tuned for more information!

Using AI to give students more feedback on their writing homework is quickly becoming one of the main uses of AI in the classroom. To date, we've saved teachers thousands of hours of grading for free.

AutoMark is the most accurate, fair, and safe AI grading system available. Discover how AutoMark can transform grading in your district: schedule a personalized demonstration today to see real-time results, or visit our website to register for a free trial.